ImagenHub: Standardizing the evaluation of conditional image generation models

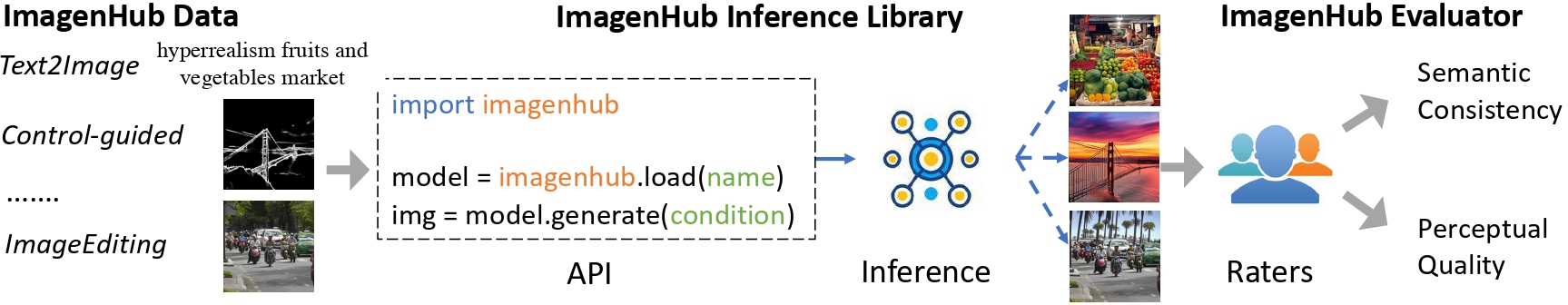

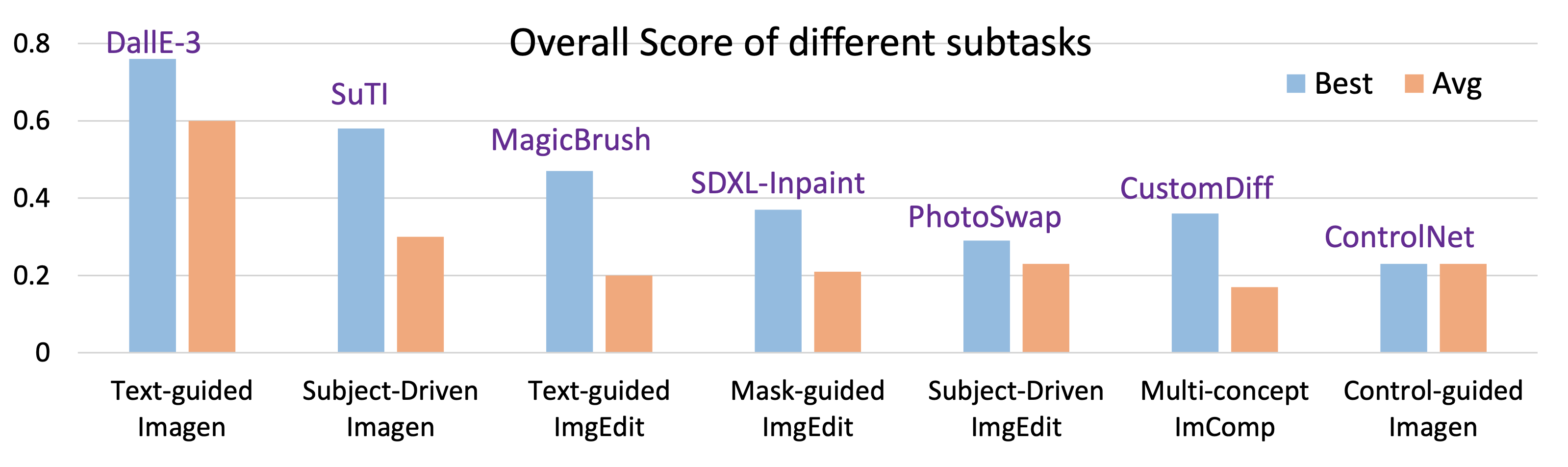

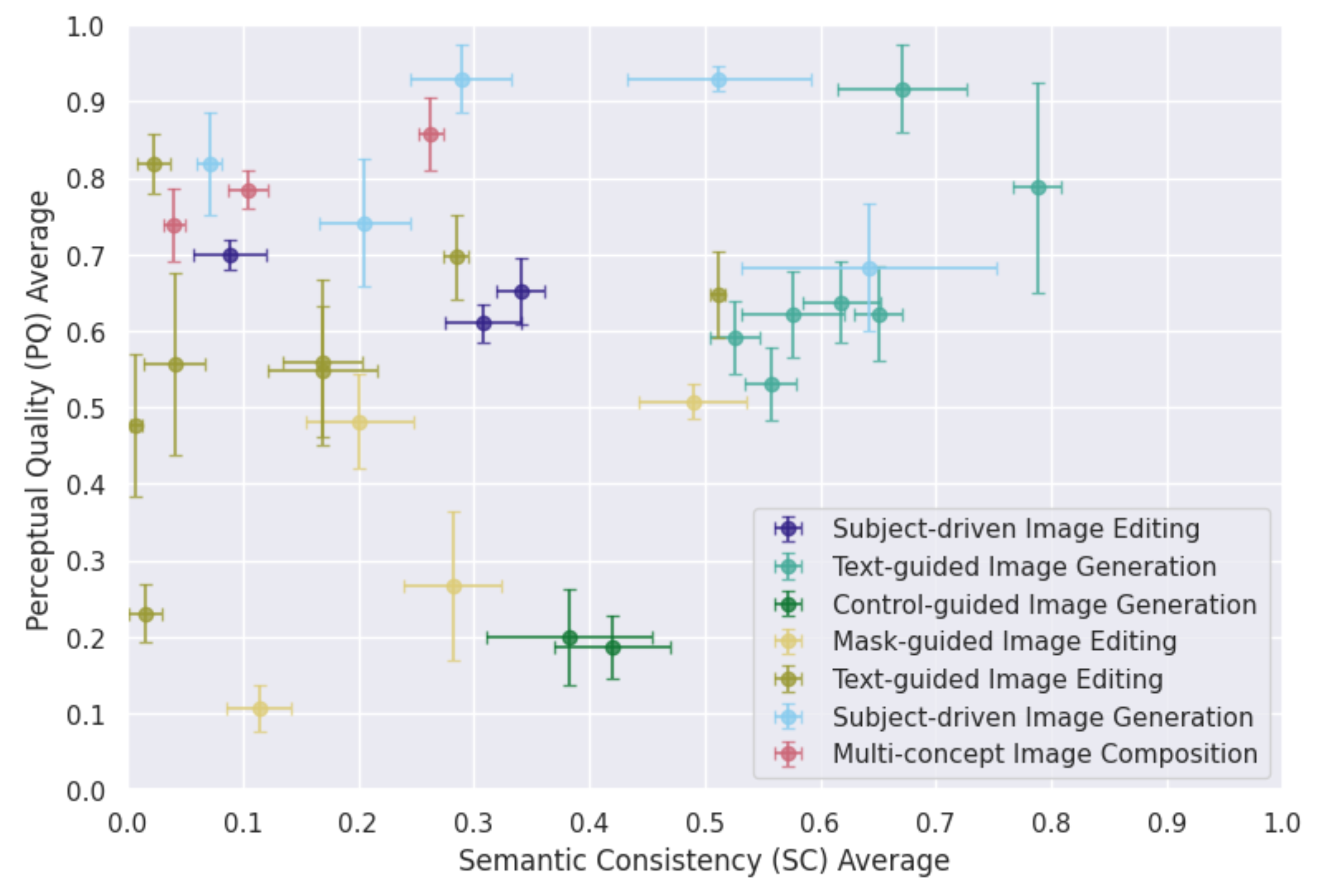

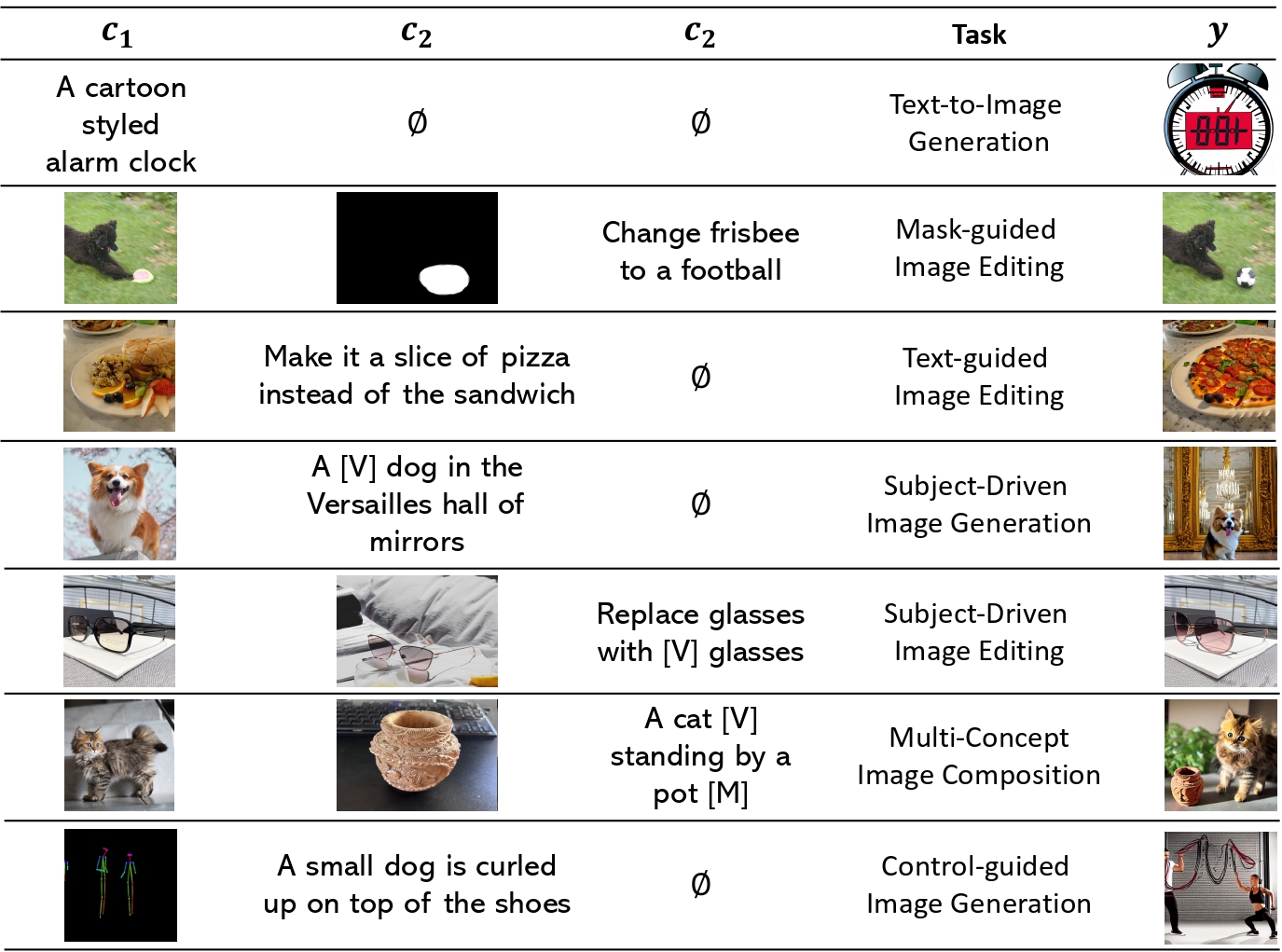

We introduce ImagenHub, a one-stop library to standardize the inference and evaluation of conditional image generation models. First, we define seven prominent tasks and curate high-quality evaluation datasets for them. Second, we build a unified inference pipeline to ensure fair comparison. Third, we design two human evaluation scores, Semantic Consistency and Perceptual Quality, along with comprehensive guidelines for evaluating generated images. We train expert raters to evaluate the model outputs based on the proposed metrics. Our human evaluation achieves high inter-worker agreement, with a Krippendorff’s alpha higher than 0.4 on 76% of the models. We comprehensively evaluated around 30 models and observed three key takeaways: (1) the performance of existing models is generally unsatisfying except for Text-guided Image Generation and Subject-driven Image Generation, with 74% of the models achieving an overall score lower than 0.5; (2) we examined the claims from published papers and found that 83% of them hold, with a few exceptions; (3) none of the existing automatic metrics has a Spearman’s correlation with human scores higher than 0.2, except for Subject-driven Image Generation. Moving forward, we will continue our efforts to evaluate newly published models and update our leaderboard to keep track of the progress in conditional image generation.
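
The third takeaway rests on Spearman's rank correlation between an automatic metric and human scores. As a rough illustration of that statistic only (not ImagenHub's own evaluation code), the sketch below computes it in plain Python. The function names and the sample numbers are hypothetical and are not taken from the benchmark.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 0-based positions i..j, made 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman's rho, computed as the Pearson correlation of the ranks."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long sequences with at least 2 items")
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (var_x * var_y) ** 0.5


if __name__ == "__main__":
    # Hypothetical per-image values, for illustration only.
    human_scores = [0.0, 0.5, 1.0, 0.5, 0.0]
    metric_scores = [0.21, 0.25, 0.30, 0.19, 0.28]
    print(f"Spearman rho = {spearman(human_scores, metric_scores):.3f}")
```

Ranking with averaged ties matters here because human ratings on a coarse scale produce many tied values.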

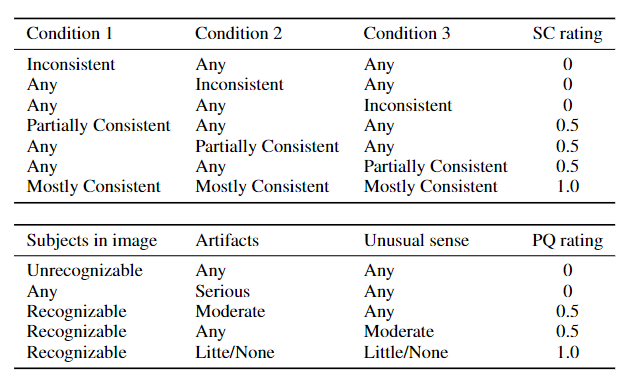

To standardize rigorous human evaluation, we stipulate the criteria for each measurement as follows:

| Model | SC (Avg) | PQ (Avg) | Overall (O) | Fleiss κ (O) | Krippendorff α (O) |
|---|---|---|---|---|---|
| **Text-guided Image Generation Model** | | | | | |
| Dalle-3 | 0.79±0.02 | 0.79±0.14 | 0.76±0.08 | 0.19 | 0.34 |
| Midjourney | 0.67±0.06 | 0.92±0.06 | 0.73±0.07 | 0.34 | 0.51 |
| DeepFloydIF | 0.65±0.02 | 0.62±0.06 | 0.59±0.02 | 0.32 | 0.51 |
| Stable Diffusion XL | 0.62±0.03 | 0.64±0.05 | 0.59±0.03 | 0.37 | 0.61 |
| Dalle-2 | 0.58±0.04 | 0.62±0.06 | 0.54±0.04 | 0.27 | 0.40 |
| OpenJourney | 0.53±0.02 | 0.59±0.05 | 0.50±0.02 | 0.30 | 0.47 |
| Stable Diffusion 2.1 | 0.56±0.02 | 0.53±0.05 | 0.50±0.03 | 0.38 | 0.50 |
| **Mask-guided Image Editing Model** | | | | | |
| SDXL-Inpainting | 0.49±0.05 | 0.51±0.02 | 0.37±0.05 | 0.50 | 0.72 |
| SD-Inpainting | 0.28±0.04 | 0.27±0.10 | 0.17±0.07 | 0.31 | 0.49 |
| GLIDE | 0.20±0.05 | 0.48±0.06 | 0.16±0.05 | 0.33 | 0.56 |
| BlendedDiffusion | 0.12±0.03 | 0.11±0.03 | 0.05±0.02 | 0.36 | 0.44 |
| **Text-guided Image Editing Model** | | | | | |
| MagicBrush | 0.51±0.01 | 0.65±0.06 | 0.47±0.02 | 0.44 | 0.67 |
| InstructPix2Pix | 0.29±0.01 | 0.70±0.06 | 0.27±0.02 | 0.55 | 0.74 |
| Prompt-to-prompt | 0.17±0.05 | 0.55±0.09 | 0.15±0.06 | 0.36 | 0.53 |
| CycleDiffusion | 0.17±0.03 | 0.56±0.11 | 0.14±0.04 | 0.41 | 0.63 |
| SDEdit | 0.04±0.03 | 0.56±0.12 | 0.04±0.03 | 0.13 | 0.13 |
| Text2Live | 0.02±0.01 | 0.82±0.04 | 0.02±0.02 | 0.10 | 0.17 |
| DiffEdit | 0.02±0.01 | 0.23±0.04 | 0.01±0.01 | 0.24 | 0.24 |
| Pix2PixZero | 0.01±0.00 | 0.48±0.09 | 0.01±0.01 | 0.37 | 0.37 |
| **Subject-driven Image Generation Model** | | | | | |
| SuTI | 0.64±0.11 | 0.68±0.08 | 0.58±0.12 | 0.20 | 0.39 |
| DreamBooth | 0.51±0.08 | 0.93±0.02 | 0.55±0.11 | 0.37 | 0.60 |
| BLIP-Diffusion | 0.29±0.04 | 0.93±0.04 | 0.35±0.06 | 0.22 | 0.39 |
| TextualInversion | 0.21±0.04 | 0.74±0.08 | 0.21±0.05 | 0.35 | 0.52 |
| DreamBooth-Lora | 0.07±0.01 | 0.82±0.07 | 0.09±0.01 | 0.29 | 0.37 |
| **Subject-driven Image Editing Model** | | | | | |
| PhotoSwap | 0.34±0.02 | 0.65±0.04 | 0.36±0.02 | 0.35 | 0.46 |
| DreamEdit | 0.31±0.03 | 0.61±0.03 | 0.32±0.03 | 0.33 | 0.52 |
| BLIP-Diffusion | 0.09±0.03 | 0.70±0.02 | 0.09±0.03 | 0.41 | 0.47 |
| **Multi-concept Image Composition Model** | | | | | |
| CustomDiffusion | 0.26±0.01 | 0.86±0.05 | 0.29±0.01 | 0.73 | 0.88 |
| DreamBooth | 0.11±0.02 | 0.78±0.02 | 0.13±0.02 | 0.61 | 0.71 |
| TextualInversion | 0.04±0.01 | 0.74±0.05 | 0.05±0.01 | 0.62 | 0.77 |
| **Control-guided Image Generation Model** | | | | | |
| ControlNet | 0.42±0.05 | 0.19±0.04 | 0.23±0.04 | 0.37 | 0.57 |
| UniControl | 0.38±0.07 | 0.20±0.06 | 0.23±0.07 | 0.36 | 0.58 |
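
The Fleiss column reports inter-rater agreement on the overall score. As a minimal sketch of how Fleiss' kappa is computed in general (not the authors' implementation), the code below assumes each image is rated by the same number of raters on a small discrete scale. The scale `(0.0, 0.5, 1.0)`, the three-rater setup, the function names, and the sample ratings are all assumptions made for illustration.

```python
def ratings_to_counts(ratings, categories=(0.0, 0.5, 1.0)):
    """Turn per-item rating lists into per-item category counts.

    `categories` is an assumed rating scale, used here only as an example.
    """
    counts = []
    for item in ratings:
        row = [0] * len(categories)
        for r in item:
            row[categories.index(r)] += 1
        counts.append(row)
    return counts


def fleiss_kappa(counts):
    """Fleiss' kappa; counts[i][j] = raters who put item i in category j."""
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_items == 0 or n_raters < 2:
        raise ValueError("need at least one item and two raters per item")
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every item must have the same number of ratings")

    n_cats = len(counts[0])
    total = n_items * n_raters
    # Share of all ratings that fell into each category.
    p_cat = [sum(row[j] for row in counts) / total for j in range(n_cats)]
    # Observed agreement per item: fraction of rater pairs that agree.
    p_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_obs = sum(p_item) / n_items
    p_exp = sum(p * p for p in p_cat)  # agreement expected by chance
    if p_exp == 1:
        raise ValueError("kappa is undefined when only one category is used")
    return (p_obs - p_exp) / (1 - p_exp)


if __name__ == "__main__":
    # Hypothetical ratings: 4 images, 3 raters each (not benchmark data).
    ratings = [[1.0, 1.0, 0.5], [0.0, 0.0, 0.0], [0.5, 1.0, 0.5], [0.0, 0.5, 0.0]]
    print(f"Fleiss kappa = {fleiss_kappa(ratings_to_counts(ratings)):.3f}")
```

Fleiss' kappa treats the categories as unordered, whereas Krippendorff's alpha can weight disagreements by distance on an ordinal or interval scale, which is one reason the two agreement columns can differ for the same model.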

@inproceedings{ku2024imagenhub,
  title={ImagenHub: Standardizing the evaluation of conditional image generation models},
  author={Max Ku and Tianle Li and Kai Zhang and Yujie Lu and Xingyu Fu and Wenwen Zhuang and Wenhu Chen},
  booktitle={The Twelfth International Conference on Learning Representations},
  year={2024},
  url={https://openreview.net/forum?id=OuV9ZrkQlc}
}